
How to Replace your NAT Gateway with VNS3 NATe: Part II

In part I of our NATe post we discussed the economic advantages of replacing your AWS NAT Gateways with Cohesive Networks VNS3 NATe devices. We also walked through how to deploy them. In this follow-up post I want to dig a little into some of the incredibly useful capabilities that VNS3 NATe provides.

In part I we discussed how to replace your current NAT Gateway. One of the steps in that process was to repoint any VPC route table rules from the NAT Gateway to the Elastic Network Interface (ENI) of the Elastic IP (EIP) that we moved to the VNS3 NATe EC2 instance. This was done for consistency, so that any rules you had in place that referenced that IP would remain intact. Unfortunately, due to the mechanics of AWS NAT Gateway, you cannot reassign its EIP while it is running, so we had to delete it in order to free up the EIP. This part of the operation introduced 15 to 30 seconds of downtime. With VNS3 NATe devices you can easily reassign an EIP without the need to delete an instance. Our upgrade path is to launch a new instance and move the EIP to it. Since we set our VPC route table rules to point to the ENI of the EIP, our routes will follow.

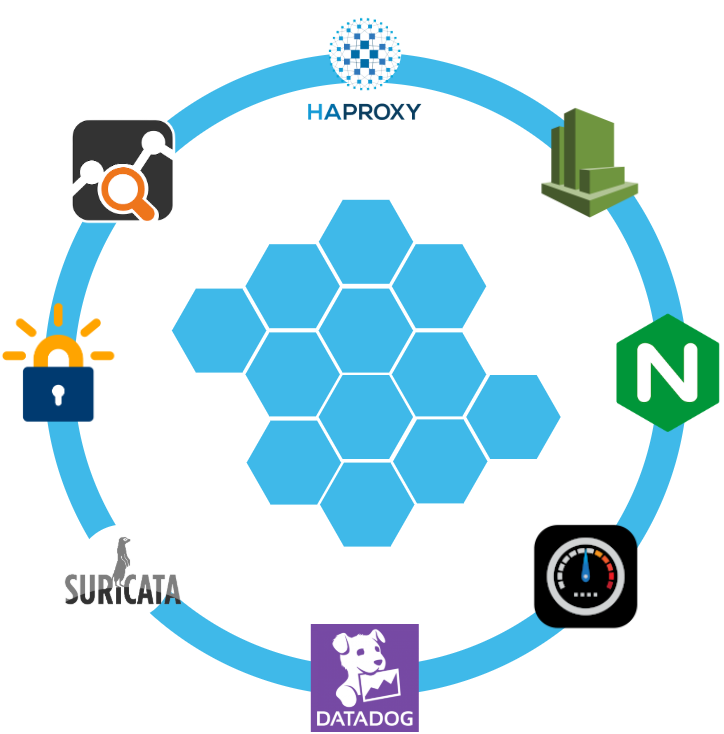

Another powerful feature of VNS3 NATe is the container plugin system. All VNS3 devices have a plugin system based around the docker subsystem. This allows our users to inject any open source, commercial or proprietary software into the network. Coupled with the VNS3 comprehensive firewall section, this becomes a versatile and powerful feature. In the case of a NAT device, there are some serious security concerns that can be addressed by leveraging this plugin system.

Suricata, the Network Intrusion Detection and Prevention System (IDS/IPS) developed by the Open Information Security Foundation (OISF) for the United States Department of Homeland Security (USDHS), is a powerful addition to a VNS3 NATe device. Modern day hacking is all about exfiltration of data. That data has to exit your network, and the device in its path is your NAT device. Additionally, malware will often attempt to download additional software kits from the internet, and this traffic also traverses your NATe device. In both of these scenarios Suricata has powerful features to analyze your traffic and identify suspicious activity and files. You can find directions for setting up Suricata on VNS3 here:

https://docs.cohesive.net/docs/network-edge-plugins/nids/

Additionally, Cohesive Networks has developed a Brightcloud Category Based Web Filtering Plugin. Brightcloud have created a distinct list of categories that all domain names have been divided into. The plugin takes advantage of this categorization in three ways:

- An allow list – A comma separated list of categories that are allowed. When allow_list.txt is present, all traffic is blocked by default and only the listed categories are permitted.

- A deny list – A comma separated list of categories that are denied. When deny_list.txt is present, all traffic is allowed by default and only the listed categories are forbidden.

- An exclusion list – A comma separated list of URLs that are not to be considered by the plugin.
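The way the three lists interact can be sketched in a few lines of Python. This is only an illustration of the behaviour described above, not the plugin's actual implementation: the function names, the example category names, and the rule that the allow list wins when both lists are present are all assumptions.

```python
def parse_list(text):
    """Split a comma separated list into a set of lower-cased entries."""
    return {item.strip().lower() for item in text.split(",") if item.strip()}


def is_permitted(url, categories, allow=None, deny=None, exclusions=frozenset()):
    """Decide whether a request passes a (hypothetical) category filter.

    allow and deny are sets of category names, or None when the
    corresponding file is absent.
    """
    if url.lower() in exclusions:
        return True  # excluded URLs are never evaluated by the filter
    cats = {c.lower() for c in categories}
    if allow is not None:
        return bool(cats & allow)  # allow list present: default deny
    if deny is not None:
        return not (cats & deny)  # deny list present: default allow
    return True  # no list configured: nothing is filtered


# Example with made-up category names:
allow = parse_list("Business, Search Engines")
print(is_permitted("example.com", ["Business"], allow=allow))
print(is_permitted("example.org", ["Gambling"], allow=allow))
```

The point of the sketch is that the presence of a list flips the default: with an allow list everything unlisted is dropped, with a deny list everything unlisted passes.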

These are just a few examples of software plugins that can run on VNS3 NATe. Others that will work are Snort, Bro (Zeek) and Security Onion. Our Sales and Support teams are always happy to help you determine the right approach and assist with your implementation.

How to Replace Your NAT Gateway with VNS3 NATe

“Network address translation (NAT) is a method of mapping an IP address space into another by modifying network address information in the IP header of packets while they are in transit across a traffic routing device. The technique was originally used to avoid the need to assign a new address to every host when a network was moved, or when the upstream Internet service provider was replaced, but could not route the network's address space. It has become a popular and essential tool in conserving global address space in the face of IPv4 address exhaustion. One Internet-routable IP address of a NAT gateway can be used for an entire private network.”

Cohesive Networks introduced the NATe offering into our VNS3 lineup of network devices back in March. It lowers operational costs while adding functionality and increasing visibility. Easily deployed and managed, it should be a no-brainer once you consider its functional gains and lower spend rate. Some of our large customers have already started the migration and are seeing savings in the tens and hundreds of thousands of dollars, and in some cases millions.

The AWS NAT Gateways provide bare-bones functionality at a premium cost. They simply provide a drop-in NAT function on a per availability zone basis within your VPC, nothing more. No visibility, no egress controls, and lots of hidden costs. You get charged between $0.045 and $0.093 an hour depending on the region. You get charged the same rate per gigabyte of data that they ‘process’, meaning data coming in and going out. That’s it, and it can really add up. A VPC with two availability zones will cost you $788.40 a year before data tax in the least expensive regions, going up to double that in the most expensive regions. Now consider that across tens, hundreds or thousands of VPCs. That’s some real money.

With Cohesive Networks VNS3 NATe you can run the same two availability zones on two t3a.nano instances with 1 year reserved instances for as low as $136.66 per year, with no data tax, as EC2 instances do not incur inbound data fees. It is about a sixth to a tenth of the price, depending on the region you are running in.
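The arithmetic behind these figures is easy to reproduce. The sketch below uses only the rates quoted above; the function name is ours, and the comparison against $136.66 applies to the least expensive regions only, since the NATe instance price in other regions is not given here.

```python
HOURS_PER_YEAR = 24 * 365  # 8760


def nat_gateway_annual_cost(hourly_rate, zones):
    """Yearly NAT Gateway charge before any per-gigabyte data tax."""
    return round(hourly_rate * HOURS_PER_YEAR * zones, 2)


cheapest = nat_gateway_annual_cost(0.045, zones=2)   # 788.4
priciest = nat_gateway_annual_cost(0.093, zones=2)   # 1629.36
nate_reserved = 136.66  # two t3a.nano reserved instances, as quoted above

print(cheapest, priciest)
print(round(cheapest / nate_reserved, 1))  # NAT Gateway costs roughly 5.8x as much
```

Any data processed by the NAT Gateway adds to the first two numbers, so the gap only widens with traffic.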

As a Solutions Architect at Cohesive Networks I’ve worked with enterprise customers around the world and understand how difficult it is to change existing architecture and cloud design. Cloud vendor prescribed architecture is not always easy to replace, as there are upstream and downstream dependencies. The really nice thing about swapping your NAT Gateways with VNS3 NATe devices is that it is a drop-in replacement for a service that is very well defined. It can be accomplished programmatically to provide near zero downtime replacement. Then you can start to build upon all the new things that VNS3 NATe gives you.

The process of replacement is very straight forward:

- First you deploy a VNS3 NATe for each availability zone that you have in your VPC in a public subnet.

- Configure its security group to allow all traffic from the subnet CIDR ranges of your private subnets.

- You do not need to install a key pair.

- Once launched turn off source / destination checking under instance networking.

- Next you will repoint any VPC route table rules, typically 0.0.0.0/0, from the existing NAT Gateway to the Elastic Network Interface of the Elastic IP that is attached to your NAT Gateway.

- Delete the NAT Gateway so as to free up the Elastic IP.

- Finally, associate the Elastic IP to your VNS3 NATe instance.

The only downtime will be the 30 or so seconds that it takes to delete the NAT Gateway.

One safeguard we always recommend to our customers is to set up a CloudWatch Recovery Alarm on all VNS3 instances. This will protect your AWS instances from any underlying hardware and hypervisor failures, which gives you effectively the same uptime assurances as services like NAT Gateway. If the instance “goes away” the alarm will trigger an automatic recovery, including restoring the Elastic IP, so that VPC route table rules remain intact. State is preserved as well, since restoration occurs from run time cache.

Now you can log into your VNS3 NATe device by going to:

https://<elastic ip>:8000

username: vnscubed

password: <ec2 instance-id>

Head over to the Network Sniffer page from the link on the left hand side of the page and set up a trace for your private subnet range to get visibility into your NATe traffic.

Windows Server Failover Clustering on an Encrypted Overlay Network with VNS3

Windows Server Failover Clustering (WSFC) is a powerful technology in the Microsoft arsenal of offerings. Unfortunately, it was designed for data centers under the assumption that it would be running on physical servers with copper wires running between them. Wouldn’t it be nice to be able to bring WSFC capabilities to the public cloud?

From Microsoft’s description, a failover cluster is a group of independent computers that work together to increase the availability and scalability of clustered roles (formerly called clustered applications and services). These clustered servers (called nodes) are connected by physical cables and software. If one or more of the clustered nodes fails, other nodes begin to provide service (a process known as failover). In addition, the clustered roles are proactively monitored to verify that they are working properly. If clustered roles are not working, they are automatically restarted or moved to another node.

Failover clusters also offer Cluster Shared Volume (CSV) functionality, providing a consistent, distributed namespace that clustered roles can use to access shared storage from all clustered nodes. With the Failover Clustering feature users experience a minimum of disruptions in service.

Implementing WSFC in the cloud is difficult because even though we have plenty of software, we lack physical cables. In this example we will use Cohesive Networks VNS3 in order to virtualize the wires necessary to trick WSFC into working.

The configuration documentation for WSFC states that each server needs to run in its own subnet. A /29 works well as you lose the top and bottom addresses for gateway and broadcast, leaving you with 6 available addresses. As we will see, you will assign the first and second low addresses, leaving you with an additional 4 addresses to be used for listeners and or cluster roles.

Our first step is to get our virtual servers to believe that they are in defined subnets even though they are in fact in a flat overlay network. Underneath they can exist in the same VPC subnet or across various VPC/VNETs. This approach allows you to run a cluster across cloud providers and across regions. You can even add members in a data center, just so long as all Windows servers are connected to the same overlay network.

So let’s say we have an overlay network of 172.16.8.0/24 and we have carved out three /29s:

172.16.8.56/29

172.16.8.64/29

172.16.8.72/29

The above should be done when you import your license, where you can choose custom and define your overlay addresses.
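If you want to sanity-check a carve-up like this before importing the license, Python's standard ipaddress module can do it. This is a small sketch using the example ranges above; the function name is ours.

```python
import ipaddress

overlay = ipaddress.ip_network("172.16.8.0/24")
cluster_subnets = ["172.16.8.56/29", "172.16.8.64/29", "172.16.8.72/29"]


def usable_addresses(cidr, parent=overlay):
    """Return the host addresses of a subnet after checking it sits in the overlay."""
    subnet = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
    if not subnet.subnet_of(parent):
        raise ValueError(f"{cidr} is not inside {parent}")
    return [str(host) for host in subnet.hosts()]


for cidr in cluster_subnets:
    hosts = usable_addresses(cidr)
    # Each /29 yields 6 usable addresses between the bottom and top address.
    print(cidr, len(hosts), hosts[0], hosts[-1])
```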

Let’s also assume we have a pair of Active Directory domain controllers assigned 172.16.0.1 and 172.16.0.2.

On each of the cluster windows servers we assign the following VNS3 client packs:

172.16.8.57

172.16.8.65

172.16.8.73

Each client pack also needs the following OpenVPN directives:

dhcp-option DNS 172.16.8.1

dhcp-option DNS 172.16.8.2

script-security 2

route-up "C:\\routes.bat"

windows-driver wintun

Further details can be found here:

https://cohesiveprod.wpenginepowered.com/blog/the-new-openvpn-2_5/

Make sure to install the Cohesive Networks Routing Agent which can be found here:

https://cn-dnld.s3.amazonaws.com/cohesive-ra-1.1.1_x86_64.exe

In Windows Network Connections change the name of your Wintun Userspace Tunnel to ‘Overlay’ so it can be referenced as below.

We will create C:\routes.bat as follows:

@echo off

set cidr=172.16.8

set /A a=56

set /A b=%a% + 1

set /A c=%b% + 1

netsh interface ipv4 set address "Overlay" static %cidr%.%b% 255.255.255.248

timeout 1

netsh interface ipv4 add route %cidr%.0/24 "Overlay"

timeout 1

C:\Windows\SysWOW64\WindowsPowerShell\v1.0\powershell.exe -command "& {Get-NetAdapter -Name Overlay | select -ExpandProperty ifIndex -first 1 }" > tmpFile

set /p INDEX= < tmpFile

del tmpFile

timeout 1

C:\Windows\SysWOW64\WindowsPowerShell\v1.0\powershell.exe -command New-NetIPAddress -InterfaceIndex %INDEX% -AddressFamily IPv4 -IPAddress %cidr%.%c% -PrefixLength 29 -DefaultGateway %cidr%.%a%

@echo off

Now when you bring up OpenVPN you will see:

At this point we’ve accomplished two very important tasks. First, we have changed the default subnet for our assigned overlay address from a /24 to a /29, which conforms to WSFC’s configuration rule that all clustered servers be in their own subnet. But since we’re using OpenVPN here we have an issue: we do not have a default gateway, and WSFC insists that we have one. So, second, we add the next address in our /29 to our virtual interface and set a default gateway for it, in this case the first address in our /29. This address is really just there to trick WSFC. While the address will answer traffic, its purpose is simply to supply an address in our /29 that has a default gateway set.

Our next step is to set up interface routes in VNS3 so that all traffic within the /29 subnets can reach the actual assigned client pack overlay addresses. In the Routes section of VNS3 you will add an interface route for each /29 as follows:

You now have a route that directs anything in the /29 address space ‘through’ the assigned client pack. So in the example of 172.16.8.56/29, where 172.16.8.57 is the client pack, and we have added 172.16.8.58 to the virtual Wintun interface, we will tell WSFC to add 172.16.8.58 for our cluster address. Our Windows server will now respond to all three addresses.
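The addressing follows the same pattern in every /29, which makes it easy to generate per node. The sketch below mirrors the a, b and c variables from routes.bat; the function and key names are ours, and treating the first of the remaining addresses as the cluster static address is taken from the New-Cluster example in this post.

```python
import ipaddress


def node_address_plan(cidr):
    """Map the addresses of one node's /29 to the roles used in this post."""
    base = ipaddress.ip_network(cidr).network_address
    return {
        "gateway": str(base),          # %cidr%.%a% in routes.bat (default gateway)
        "client_pack": str(base + 1),  # %cidr%.%b%, the VNS3 client pack address
        "secondary": str(base + 2),    # %cidr%.%c%, added so a gateway can be set
        # Four addresses left over for cluster addresses, listeners and roles;
        # the New-Cluster example uses the first one as its static address.
        "remaining": [str(base + offset) for offset in range(3, 7)],
    }


for cidr in ("172.16.8.56/29", "172.16.8.64/29", "172.16.8.72/29"):
    plan = node_address_plan(cidr)
    print(cidr, plan["client_pack"], plan["secondary"], plan["remaining"][0])
```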

Let’s try it out:

You will need to install the Failover Clustering feature, and you will need to have all windows servers joined to a domain.

In our example I have named my three servers Cluster-1, Cluster-2 and Cluster-3. From any of these three servers, open up a command window and enter powershell mode:

New-Cluster -Name OverlayCluster1 -Node Cluster-1,Cluster-2,Cluster-3 -NoStorage -StaticAddress 172.16.8.59,172.16.8.67,172.16.8.75 -AdministrativeAccessPoint ActiveDirectoryAndDns

You should see some activity metering and then a success message.

Now you can connect to a cluster in the Failover Cluster Manager and enter a period to denote this server. With clustername.domain selected under “Cluster Core Resources” expand “Name: Clustername.” Right click on the underlay address that should be labeled “Failed” and select “Remove.” Under “Nodes” you should see your three servers. Under “Networks” you will see each of the overlay /29s as well as each of the underlay subnets. For the underlay subnets, under properties, select “Do not allow cluster network communication on this network.” Your cluster is now ready to add roles. Try it out.

To tear the cluster down again when you are finished:

Get-Cluster -Name OverlayCluster1 | Remove-Cluster -Force -CleanupAD

Cloud Instance Quality vs. Cloud Platform Cost-at-Scale

What is the failure rate of cloud instances at Amazon, Azure, Google?

I have looked for specific numbers – but so far found just aggregate “nines” for cloud regions or availability zones. So my anecdotal response is “for the most part, a REAL long time”. It is not unusual for us to find customers’ Cohesive network controllers running for years without any downtime. I think the longest we have seen so far is six years of uptime.

So – with generally strong uptimes for instance-based solutions, and solid HA and recovery mechanisms for cloud instances – how much premium should you spend on some of the most basic “cloud platform solutions”?

Currently cloud platforms are charging a significant premium for some very basic services which do not perform that differently, and in some cases I would argue less well than instance-based solutions; either home-grown or 3rd-party vended.

Let’s look at a few AWS examples:

- NAT-Gateway 4.5 cents per hour plus a SIGNIFICANT data tax of 4.5 cents per gigabyte

- Transit Gateway VPC Connection 5 cents per hour for each VPC connection plus a HEALTHY data tax of 2 cents per gigabyte

- AWS Client VPN $36.50 per connected client (on a full-time monthly basis), $72 per month to connect those VPN users to a single subnet! (AWS does calculate your connected client costs at 5 cents per hour, but since we should all basically be on VPNs at all times, how much will this save you?)

NOTE: The items I call “data taxes” are on top of the cloud outbound network charges you pay (still quite hefty on their own).
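To get a feel for how quickly the data tax overtakes the hourly charge, here is a rough sketch using the rates listed above. The 730 hour month and the 10,000 GB of monthly traffic are assumptions chosen for illustration, not measurements.

```python
HOURS_PER_MONTH = 730  # assumption: average month, consistent with $36.50 at 5 cents/hour


def monthly_cost(hourly_rate, per_gb_rate, gb_per_month):
    """Return (fixed hourly charge, data tax) for one month, in dollars."""
    fixed = hourly_rate * HOURS_PER_MONTH
    data_tax = per_gb_rate * gb_per_month
    return round(fixed, 2), round(data_tax, 2)


traffic_gb = 10_000  # hypothetical monthly volume through one gateway

print("NAT Gateway:", monthly_cost(0.045, 0.045, traffic_gb))
print("Transit Gateway VPC connection:", monthly_cost(0.05, 0.02, traffic_gb))
```

At that volume the per-gigabyte charge on the NAT Gateway is more than ten times the hourly charge, which is why the hourly price alone understates the bill.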

If you are using cloud at scale, depending on the size of your organization, the costs of these basic services get really big, really fast. At Cohesive we have customers that are spending high six figures, and even seven figures, in premium on these types of services. The good news is that for a number of those customers it is increasingly “were spending”, as they move to equally performant, more observable, instance-based solutions from Cohesive.

Here is a recent blog post from Ryan at Cohesive providing an overview of Cohesive NATe nat-gateway instances versus cloud platforms. For many, a solution like this seems to meet the need.

Although – I think Ryan’s post may have significantly underestimated the impact of data taxes. https://twitter.com/pjktech/status/1372973836539457547

So you say “Yes, instance availability is really good, but what about [fill in your failure scenarios here] ?”

Depending on how small your recovery windows need to be, there are quite a range of HA solutions to choose among. Here are a few examples:

- Protect against fat-finger termination and automation mistakes with auto-scale groups of 1 and termination protection

- Use AWS CloudWatch and EC2 Auto Recovery to protect against AWS failures

- Run multiple instances and add in a Network Load Balancer for still significant savings

- Use the Cohesive HA plugin, which allows one VNS3 Controller instance to take over for another (with proper IAM permissions)

Overall, this question is a “modern” example of the “all-in” vs. “over-the-top” tension I wrote about in 2016 (still available on Medium). More simply put, I think of the choice as being about when you run “on the cloud” and when you choose to run “in the cloud”, and ideally it is not all or none either way.

In summary, given how darn good the major cloud platforms are at their basic remit of compute, bulk network transport, and object storage, do you need to be “in the cloud” at a significant expense premium, or can you be “on the cloud” for significant savings at scale for a number of basic services?

Follow the Bouncing Ball; Finding Your Packets

One of the great strengths of VNS3 has always been the ease with which you can look at your network traffic, a necessity for troubleshooting connectivity issues or attesting to correct packet flow. With our release of VNS3 5.0 we have added some big functional improvements that make our network sniffer even better.

Whereas the network sniffer used to run in a single user, single process mode, you can now run multiple captures in multiple web sessions. This is extremely helpful when multiple people are logged into a controller diagnosing issues together, or when you want to flip back and forth between two captures running simultaneously. In addition, all filter expressions are now saved so that they can be rerun in the future. You don’t have to reacquaint yourself with filter syntax every time.

Another big improvement is the ability to run a capture across all interfaces. This new functionality allows you to follow a packet, for example, coming off of an IPsec tunnel, up to a container running a proxy load balancer and out to a compute host connected via the encrypted overlay network. This operation would look at three interfaces: eth0, plugin0 and tun0. This is really helpful when observing the full path of your packets.

If you want to take the output of your captures and analyze them in other tools, we now provide the ability to download your captures in pcap format so that you can read them into your preferred network analysis tool or SIEM. There are a number of free online tools like apackets.com and open source projects like Wireshark and tshark that you can use.
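For a quick look at a downloaded capture without any extra tooling, the classic pcap layout is simple enough to walk with the Python standard library. This is a sketch under the assumption that the download is in the classic libpcap format with microsecond timestamps (not pcapng); the function name and the file name in the usage comment are hypothetical.

```python
import struct


def summarize_pcap(stream):
    """Return (packet_count, total_bytes_on_wire) for a classic pcap stream."""
    global_header = stream.read(24)  # fixed-size file header
    if len(global_header) < 24:
        raise ValueError("file too short to be a pcap capture")
    magic = struct.unpack("<I", global_header[:4])[0]
    if magic == 0xA1B2C3D4:
        endian = "<"  # written on a little-endian host
    elif magic == 0xD4C3B2A1:
        endian = ">"  # written on a big-endian host
    else:
        raise ValueError("not a classic (microsecond) pcap file")
    packets = 0
    wire_bytes = 0
    while True:
        record = stream.read(16)  # per-packet header
        if len(record) < 16:
            break
        _ts_sec, _ts_usec, incl_len, orig_len = struct.unpack(endian + "IIII", record)
        if len(stream.read(incl_len)) < incl_len:
            break  # truncated final record
        packets += 1
        wire_bytes += orig_len
    return packets, wire_bytes


# Usage (file name is an example):
# with open("capture.pcap", "rb") as fh:
#     print(summarize_pcap(fh))
```

For anything beyond counting packets, a full analysis tool such as Wireshark is the better choice.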

One more improvement that we have made is that all captures will automatically terminate after one hour of running. Previously, captures would run until you purposefully stopped them. That ran the risk of over-logging and some reduction in performance. It is much easier to have them stop automatically after an hour than to remember to stop them manually.

Ultimately a network device is only as good as the visibility it provides. We at Cohesive Networks strive to provide as much insight as possible and the user experience to make it as simple as possible.

VNS3 Feature Spotlight – HTTPS Certificate Upload

Managing HTTPS certificates in your VNS3 controller has just become easier. At Cohesive we recognise the challenges involved in obtaining and uploading these certificate chains to your device. VNS3 5.0 significantly improves this functionality and lets you manage HTTPS certificates in bulk.

When you launch VNS3 in your cloud environment it installs its own self-signed certificate. This is necessary as SSL certificates cannot be associated with an IP address. Increasingly, web browsers are giving ominous warnings about, or completely blocking, websites that are not HTTPS. Organisations also prefer to access their cloud assets using their own domains. They can achieve this by uploading their signed certificates to their VNS3 controller.

As part of our VNS3 5.0 release we have overhauled both the user experience and user interface to help our customers easily install and review the certificates on their devices.

The user experience has now been simplified with an intelligent multi-file upload, which has been designed to avoid confusion around which certificate file (root, intermediate, end user) needs to be uploaded at each step. Each Certificate Authority tends to provide its own files and formats, so we’ve moved to standardise the import process, reducing the cognitive load of what can be an onerous process.

The Certificate Upload page has also been updated with a clear and easy to read table that shows the installed certificates, the issuer and the certificate lifespan. The inclusion of a SHA-256 checksum adds to the confidence of the chain of custody.
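You can compute a SHA-256 fingerprint for a certificate yourself before uploading it and compare the two values. The sketch below hashes the DER encoding of a single PEM certificate, which is the conventional way to fingerprint a certificate; whether the VNS3 table displays exactly this value and format is an assumption, and the function name is ours.

```python
import hashlib
import ssl


def sha256_fingerprint(pem_text):
    """Return the colon separated SHA-256 fingerprint of one PEM certificate."""
    # Convert the PEM text to its binary DER form; expects a single
    # certificate, not a concatenated chain.
    der = ssl.PEM_cert_to_DER_cert(pem_text.strip())
    digest = hashlib.sha256(der).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


# Usage (file name is an example):
# with open("server.crt") as fh:
#     print(sha256_fingerprint(fh.read()))
```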

Our flexible plugin system allows us to simplify this process even further: our curated LetsEncrypt plugin utilises VNS3’s Edge Networking plugin subsystem to:

- Automate the generation and renewal processes of HTTPS certs

- Perform HTTPS challenge verification

- Upload and install certificates via the VNS3 API

Once installed, the LetsEncrypt plugin renews your certificates automatically at a regular interval.

All you need to utilise the plugin’s features are:

- A registered domain name for which certificates will be generated. This domain name must resolve to your VNS3 controller’s public IP address

- An email address to be associated with the certificate, usually your webmaster address

- Your VNS3 controller’s plugin subsystem subnet

- Your VNS3 controller’s API password

Check out the full documentation of the LetsEncrypt plugin which can be found here:

https://docs.cohesive.net/docs/network-edge-plugins/lets-encrypt/
